Capture your Kinesis Stream Analytics Events in Treasure Data

With data-driven companies like Coinbase, Dash and Supercell adopting Amazon Kinesis for real-time delivery of in-app data, in some cases handling billions of events per day, it’s easy to see why you might want to integrate AWS with Treasure Data’s analytics pipeline. Amazon Kinesis and Treasure Data are both fast-growing, complementary parts of the 2016 big data landscape.

Here is how a few of these companies use Kinesis:

Coinbase:

“…created a streaming data insight pipeline in AWS, with real-time exchange analytics processed by an Amazon Kinesis managed big-data processing service.” [1]

Dash:

“…uses Amazon Kinesis to collect vehicle and location data every 1-4 seconds from each car, ingesting a total of over 1 TB of data per day from thousands of individual vehicles.” [2]

Supercell:

“Supercell is using Amazon Kinesis for real-time delivery of in-game data, handling 45 billion events per day.” [3]

Fluentd also offers a powerful way to stream data into Treasure Data. However, if you already work with AWS and its ecosystem, Kinesis and Lambda functions give data scientists and data engineers familiar tools and a very short learning curve. This approach is also much simpler than building a traditional ETL pipeline, and it shortens the path from insights to value.

In this post, we’ll show you an easy way to use Amazon Web Services’ Kinesis and Lambda functions to ingest data into Treasure Data.

To get started, you’ll need a few things:

- A basic knowledge of Treasure Data;

- Your Treasure Data Master API key, available from your Treasure Data Profile;

- Basic AWS knowledge, including an AWS account configured with all necessary keys and IAM credentials.

Step 1: Set up your Kinesis Stream

In your AWS Management Console, go to ‘Analytics’ and click ‘Kinesis’. Go to ‘Kinesis Streams’ and click ‘Create Stream’.

Give your Kinesis Stream a name and specify ‘1’ as the Number of Shards. Then click ‘Create’.
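If you prefer scripting to clicking through the console, the same single-shard stream can be created with boto3, the AWS SDK for Python. This is a minimal sketch, assuming boto3 is installed and your AWS credentials and region are already configured; the stream name ‘kinesis-td-demo’ is a hypothetical placeholder. The client is passed in as a parameter so the function has no hard dependency on boto3 at import time.

```python
def create_stream(kinesis_client, stream_name="kinesis-td-demo", shard_count=1):
    """Create a Kinesis stream, wait until it is ACTIVE, and return its ARN.

    kinesis_client is a boto3 Kinesis client, e.g. boto3.client("kinesis").
    The default stream name is a hypothetical placeholder.
    """
    kinesis_client.create_stream(StreamName=stream_name, ShardCount=shard_count)
    # The "stream_exists" waiter polls DescribeStream until the status is ACTIVE.
    kinesis_client.get_waiter("stream_exists").wait(StreamName=stream_name)
    summary = kinesis_client.describe_stream_summary(StreamName=stream_name)
    return summary["StreamDescriptionSummary"]["StreamARN"]


# Usage (requires boto3 and configured AWS credentials):
#   import boto3
#   stream_arn = create_stream(boto3.client("kinesis"))
```

Returning the stream ARN is convenient because that is what Lambda needs in order to subscribe to the stream.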

Step 2: Set up your AWS Lambda function

We’ll use AWS Lambda as part of our ingestion pipeline. Lambda lets you run code in response to triggers such as new records arriving in an Amazon Kinesis stream.
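Behind the scenes, choosing Kinesis as a Lambda event source creates an event source mapping, which tells Lambda to poll the stream and invoke your function with batches of records. As a hedged sketch, assuming boto3 and a function that already exists, the mapping can also be created in code. The function name ‘kinesis-to-td’ and the batch size of 100 are hypothetical choices, not values from this post.

```python
def connect_stream_to_lambda(lambda_client, stream_arn,
                             function_name="kinesis-to-td", batch_size=100):
    """Have Lambda poll a Kinesis stream and invoke function_name with batches.

    lambda_client is a boto3 Lambda client, e.g. boto3.client("lambda").
    function_name and batch_size are hypothetical defaults.
    Returns the UUID of the new event source mapping.
    """
    response = lambda_client.create_event_source_mapping(
        EventSourceArn=stream_arn,
        FunctionName=function_name,
        StartingPosition="LATEST",  # read only records that arrive from now on
        BatchSize=batch_size,
        Enabled=True,
    )
    return response["UUID"]


# Usage (requires boto3, configured AWS credentials and an existing function):
#   import boto3
#   mapping_id = connect_stream_to_lambda(boto3.client("lambda"), stream_arn)
```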

In your AWS Management Console, go to ‘Compute’ and click ‘Lambda’. Click ‘Get Started Now’.

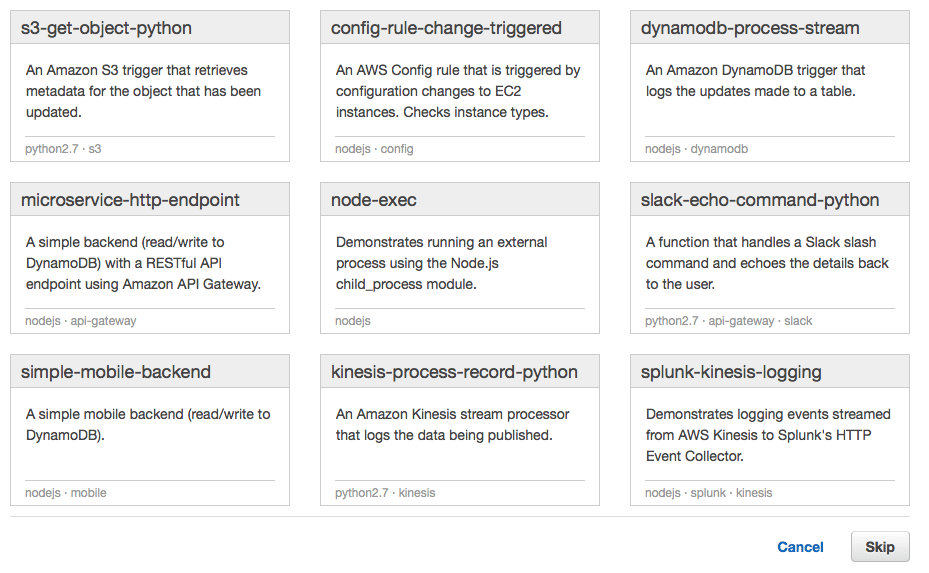

- On the first screen, select ‘kinesis-process-record-python’.

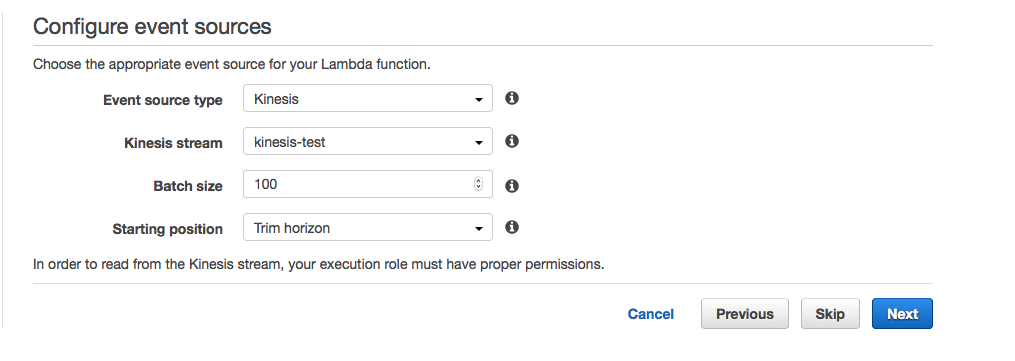

- On the second screen, select ‘Kinesis’ as your event source type, and select the Kinesis stream you previously configured.

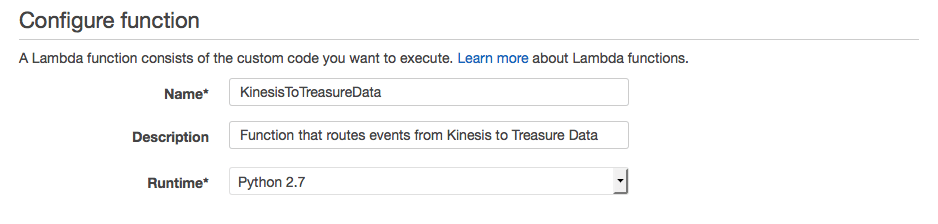

- On the next screen, you’ll configure the function itself. Specify a ‘Name’ and ‘Description’, and choose ‘Python 2.7’ as the ‘Runtime’.

- Next, copy and paste the function below into the ‘Lambda function code’ window, setting the ‘td_master_key’ variable to your Treasure Data Master API key. (NOTE: for our purposes today, we’ll set ‘td_database’ and ‘td_table’ to ‘kinesis’ and ‘events’ respectively. You can change these database and table names as you see fit.)

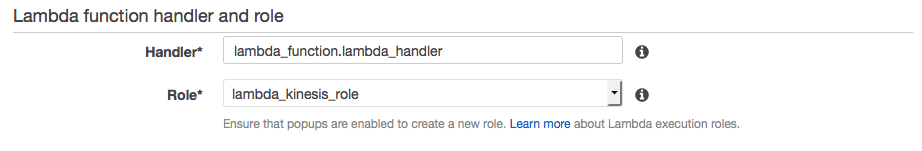

- Specify a Kinesis execution role from the ‘Role’ drop-down menu. This opens a second IAM window; select the defaults there and click ‘Allow’. The field should then be populated with ‘lambda_kinesis_role’.

- Accept other defaults and click ‘Next’.

- Review your function and hit ‘Create function’.
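For readers who want to see the moving parts, here is a minimal Python 3 sketch of the kind of function that goes in the ‘Lambda function code’ window: it base64-decodes each Kinesis record, parses it as JSON and forwards it to Treasure Data over HTTP. It is not the code from this post. The endpoint URL and the X-TD-Write-Key header are assumptions modeled on Treasure Data’s HTTP event-ingestion (Postback) API, so verify both against the current Treasure Data documentation. Also note that the post selects the Python 2.7 runtime, whereas this sketch targets Python 3.

```python
import base64
import json
import urllib.request

# Hypothetical placeholders: substitute your own key, database and table.
TD_API_KEY = "YOUR_TD_API_KEY"
TD_DATABASE = "kinesis"
TD_TABLE = "events"
# ASSUMPTION: Postback-style HTTP ingestion endpoint; confirm in the TD docs.
TD_ENDPOINT = "https://in.treasuredata.com/postback/v3/event/{db}/{table}"


def decode_records(event):
    """Yield each Kinesis record's payload, base64-decoded and parsed as JSON."""
    for record in event.get("Records", []):
        raw = base64.b64decode(record["kinesis"]["data"])
        yield json.loads(raw.decode("utf-8"))


def post_to_td(payload, api_key=TD_API_KEY, db=TD_DATABASE, table=TD_TABLE):
    """POST one JSON event to Treasure Data and return the HTTP status code."""
    request = urllib.request.Request(
        TD_ENDPOINT.format(db=db, table=table),
        data=json.dumps(payload).encode("utf-8"),
        # ASSUMPTION: header name the Postback API expects for the API key.
        headers={"Content-Type": "application/json", "X-TD-Write-Key": api_key},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


def lambda_handler(event, context, sender=post_to_td):
    """Lambda entry point: forward every record in the batch to Treasure Data."""
    count = 0
    for payload in decode_records(event):
        sender(payload)
        count += 1
    print("Forwarded %d record(s) to %s.%s" % (count, TD_DATABASE, TD_TABLE))
    return count
```

Lambda calls lambda_handler(event, context) and uses the default sender. The extra sender parameter only exists so that the forwarding step can be swapped out or exercised without network access.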

Step 3: Test your Lambda function

Now that it’s configured, you can test the function. From ‘Actions’ > ‘Configure test event’, paste the following test event, and then click ‘Save and test’:

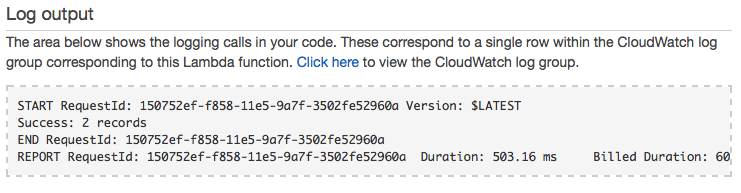
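A Kinesis test event mirrors what Lambda actually receives from the stream: each record’s payload is carried base64-encoded in its kinesis.data field. As a sketch, you can generate a test event for the record body used in this post, {"field1": 2, "field2": "b"}, and paste the printed JSON into ‘Configure test event’. The partition key and the small set of metadata fields shown here are illustrative and trimmed down; the console’s own Kinesis template includes more fields.

```python
import base64
import json


def make_test_event(body, partition_key="partitionKey-1"):
    """Wrap a JSON-serializable body in a minimal Kinesis-style Lambda event."""
    data = base64.b64encode(json.dumps(body).encode("utf-8")).decode("ascii")
    return {
        "Records": [
            {
                "eventSource": "aws:kinesis",
                "eventName": "aws:kinesis:record",
                "kinesis": {
                    "kinesisSchemaVersion": "1.0",
                    "partitionKey": partition_key,  # illustrative value
                    "data": data,  # base64-encoded JSON payload
                },
            }
        ]
    }


# Print JSON you can paste into the Lambda console's test-event editor.
print(json.dumps(make_test_event({"field1": 2, "field2": "b"}), indent=2))
```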

If everything worked, you should see the following in the ‘log output’ section:

Step 4: Confirm Data Upload to Treasure Data

After 1-3 minutes, your data should be ingested into Treasure Data. Unlike a traditional ETL pipeline, Treasure Data is schema-on-read, so you shouldn’t encounter any schema incompatibility issues during the import! In this example, we named our database kinesis and our table events, and we can now see the database in the Treasure Data databases window:

Lastly, you can go to the query window to query the data. Because the test record’s body is the base64-encoded JSON object {"field1": 2, "field2": "b"}, a simple select * from events query should return that decoded record.

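You can also run the same query from Python. This sketch assumes the td-client-python package (imported as tdclient) and follows the query, wait and result pattern shown in that package’s README. Treat the exact signatures, and the choice of the Presto engine, as assumptions to verify. The client object is passed in so the function can be exercised without a Treasure Data account.

```python
def fetch_events(td_client, database="kinesis", sql="SELECT * FROM events",
                 engine="presto"):
    """Run a query through a td-client-python Client and return all rows.

    ASSUMPTION: td_client.query(database, sql, type=...) returns a job object
    exposing wait() and result(), as in the td-client-python README.
    """
    job = td_client.query(database, sql, type=engine)
    job.wait()  # block until the query job finishes
    return list(job.result())


# Usage (assumes the td-client package is installed and TD_API_KEY is set):
#   import tdclient
#   with tdclient.Client() as td:
#       for row in fetch_events(td):
#           print(row)
```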

Today we showed you our Amazon Kinesis integration, but our universal data connectors can route almost any data source to almost any data destination. Check back on this blog each week for more of our integrations.

What’s next?

Want to get the best out of your data analytics infrastructure with a dead simple setup and minimal expense? Try out Treasure Data, or request a demo!